The Specter of Undefined Behavior

If you’ve ever spoken to a programmer, and really got them on a roll, they may have said the words “undefined behavior” to you. Since you speak English, you probably know what each of those words mean, and can imagine a reasonable meaning for them in that order. But then your programmer friends goes on about “null-pointer dereferencing” and “invariant violations” and you start thinking about cats or football or whatever because you are not a programmer.

I often find myself being asked what it is that I do. Since I’ve spent the last few years working on my Computer Science degree, and have spent much of that time involved in programming language research, I often find myself trying to explain this concept. Unfortunately, when put on the spot, I usually am only able to come up with the usual sort of explanation that programmers use among themselves: “If you invoke undefined behavior, anything can happen! Try to dereference a null pointer? Bam! Lions could emerge from your monitor and eat your family!” Strictly speaking, while I’m sure some compiler writer would implement this behavior if they could, it’s not a good explanation for a person who doesn’t already kind of understand the issues at play.

Today, I’d like to give an explanation of undefined behavior for a lay person. Using examples, I’ll give an intuitive understanding of what it is, and also why we tolerate it. Then I’ll talk about how we go about mitigating it.

Division By Zero

Here is one that most of us know. Tell me, what is 8 / 0? The answer of course is “division by zero is undefined.” In mathematics, there are two sorts of functions: total and partial. A total function is defined for all inputs. If you say a + b, this can be evaluated to some result no matter what you substitute for a and b. Addition is total. The same cannot be said for division. If you say a / b, this can be evaluated to some result no matter what you substitute for a and b unless you substitute b with 0. Division is not total.

If you go to the Wikipedia article for division by zero you’ll find some rationale for why division by zero is undefined. The short version is that if it were defined, then it could be mathematically proven that one equals two. This would of course imply that cats and dogs live in peace together and that pigs fly, and we can’t have that!

However, there is a way we can define division to be total that doesn’t have this issue. Instead of defining division to return a number, we could define division to return a set of numbers. You can think of a set as a collection of things. We write this as a list in curly braces. {this, is, a, set, of, words} I have two cats named Gatito and Moogle. I can have a set of cats by writing {Gatito, Moogle}. Sets can be empty; we call the empty set the null set and can write it as {} or using this symbol ∅. I’ll stick with empty braces because one of the things I hate about mathematics is everybody’s insistence on writing in Greek.

So here is our new total division function:

totalDivide(a, b)

if (b does not equal 0)

output {a / b}

otherwise

output {}

If you use totalDivide to do your division, then you will never have to worry about the undefined behavior of division! So why didn’t Aristotle (or Archimedes or Yoda or whoever invented division) define division like this in the first place? Because it’s super annoying to deal with these sets. None of the other arithmetic functions are defined to take sets, so we’d have to constantly test that the division result did not produce the empty set, and extract the result from the set. In other words: while our division is now total, we still need to treat division by zero as a special case. Let us try to evaluate 2/2 + 2/2 and totalDivide(2,2) + totalDivide(2,2):

1: 2/2 + 2/2

2: 1 + 1

3: 2

Even showing all my work, that took only 3 lines.

1: let {1} = totalDivide(2,2)

2: let {1} = totalDivide(2,2)

3: 1 + 1

4: 2

Since you can’t add two sets, I had to evaluate totalDivide out of line, and extract the values and add them separately. Even this required my human ability to look at the denominator and see that it wasn’t zero for both cases. In other words, making division total made it much more complicated to work with, and it didn’t actually buy us anything. It’s slower. It’s easier to mess up. It has no real value. As humans, it’s fairly easy for us to look at the denominator, see that it’s zero, and just say “undefined.”

Cartons of Eggs

I’m sure many of you have a carton of eggs in your fridge. Go get me the 17th egg from your carton of eggs. Some of you will be able to do this, and some of you will not. Maybe you only have a 12 egg carton. Maybe you only have 4 eggs in your 18 egg carton, and the 17th egg is one of the ones that are missing. Maybe you’re vegan.

A basic sort of construct in programming is called an “array.” Basically, this is a collection of the same sort of things packed together in a region of memory on your computer. You can think of a carton of eggs as an array of eggs. The carton only contains one sort of thing: an egg. The eggs are all packed together right next to each other with nothing in between. There is some finite amount of eggs.

If I told you “for each egg in the carton, take it out and crack it, and dump it in a bowl starting with the first egg”, you would be able to do this. If I told you “take the 7th egg and throw it at your neighbor’s house” you would be able to do this. In the first example, you would notice when you cracked the last egg. In the second example you would make sure that there was a 7th egg, and if there wasn’t you probably picked some other egg because your neighbor is probably a jerk who deserves to have his house egged. You did this unconsciously because you are a human who can react to dynamic situations. The computer can’t do this.

If you have some array that looks like this (array locations are separated by | bars | and * stars * are outside the array) ***|1|2|3|*** and you told the computer “for each location in the array, add 1 to the number, starting at the first location” it would set the first location to be 2, the second location to be 3, the third location to be 4. Then it would interpret the bits in the location of memory directly to the right of the third location as a number, and it would add 1 to this “number” thereby destroying the data in that location. It would do this forever because this is what you told the machine to do. Suppose that part of memory was involved in controlling the brakes in your 2010 era Toyota vehicle. This is obviously incredibly bad, so how do we prevent this?

The answer is that the programmer (hopefully) knows how big the array is and actually says “starting at location one, for the next 3 locations, add one to the number in the location”. But suppose the programmer messes up, and accidentally says “for the next 4 locations” and costs a multinational company billions of dollars? We could prevent this. There are programming languages that give us ways to prevent these situations. “High level” programming languages such as Java have built-in ways to tell how long an array is. They are also designed to prevent the programmer from telling the machine to write past the end of the array. In Java, the program will successfully write |2|3|4| and then it will crash, rather than corrupting the data outside of the array. This crash will be noticed in testing, and Toyota will save face. We also have “low level” programming languages such as C, which don’t do this. Why do we use low level programming languages? Let’s step through what these languages actually have the machine do for “starting at location one, for the next 3 locations, add one to the number in the location”: First the C program:

NOTE: location[some value] is shorthand for “the location identified by some value.” egg_carton[3] is the third egg in the carton. Additionally, you should read these as sequential instructions “first do this, then do that” Finally, these examples are greatly simplified for the purposes of this article.

1: counter = 1

2: location[counter] = 1 + 1

3: if (counter equals 3) terminate

4: counter = 2

5: location[counter] = 2 + 1

6: if (counter equals 3) terminate

7: counter = 3

8: location[count] = 3 + 1

9: if (counter equals 3) terminate

Very roughly speaking, this is what the computer does. The programmer will use a counter to keep track of their location in the array. After updating each location, they will test the counter to see if they should stop. If they keep going they will repeat this process until the stop condition is satisfied. The Java programmer would write mostly the same program, but the program that translates the Java code into machine code (called a compiler) will add some stuff:

1: counter = 1

2: if (counter greater than array length) crash

3: location[counter] = 1 + 1

4: if (counter equals 3) terminate

5: counter = 2

6: if (counter greater than array length) crash

7: location[counter] = 2 + 1

8: if (counter equals 3) terminate

9: counter = 3

10: if (counter greater than array length) crash

11: location[count] = 3 + 1

12: if (counter equals 3) terminate

As you can see, 3 extra lines were added. If you know for a fact that the array you are working with has a length that is greater than or equal to three, then this code is redundant.

For such a small array, this might not be a huge deal, but suppose the array was a billion elements. Suddenly an extra billion instructions were added. Your phone’s processor likely runs at 1-3 gigahertz, which means that it has an internal clock that ticks 1-3 billion times per second. The smallest amount of time that an instruction can take is one clock cycle, which means that in the best case scenario, the java program takes one entire second longer to complete. The fact of the matter is that “if (counter greater than array length) crash” definitely takes longer than one clock cycle to complete. For a game on your phone, this extra second may be acceptable. For the onboard computer in your car, it is definitely not. Imagine if your brakes took an extra second to engage after you push the pedal? Congressmen would get involved!

In Java, reading off the end of an array is defined. The language defines that if you attempt to do this, the program will crash (it actually does something similar but not the same, but this is outside the scope of this article). In order to enforce this definition, it inserts these extra instructions into the program that implement the functionality. In C, reading off the end of an array is undefined. Since C doesn’t care what happens when you read off the end of an array, it doesn’t add any code to your program. C assume you know what you’re doing, and have taken the necessary steps to ensure your program is correct. The result is that the C program is much faster than the Java program.

There are many such undefined behaviors in programming. For instance, your computer’s division function is partial just like the mathematical version. Java will test that the denominator isn’t zero, and crash if it is. C happily tells the machine to evaluate 8 / 0. Most processors will actually go into a failure state if you attempt to divide by zero, and most operating systems (such as Windows or Mac OSX) will crash your program to recover from the fault. However, there is no law that says this must happen. I could very well create a processor that sends lions to your house to punish you for trying to divide by zero. I could define x / 0 = 17. The C language committee would be perfectly fine with either solution; they just don’t care. This is why people often call languages such as C “unsafe.” This doesn’t mean that they are bad necessarily, just that their use requires caution. A chainsaw is unsafe, but it is a very powerful tool when used correctly. When used incorrectly, it will slice your face off.

What To Do

So, if defining every behavior is slow, but leaving it undefined is dangerous, what should we do? Well, the fact of the matter is that in most cases, the added overhead of adding these extra instructions is acceptable. In these cases, “safe” languages such as Java are preferred because they ensure program correctness. Some people will still write these sorts of programs in unsafe languages such as C (for instance, my own DMP Photobooth is implemented in C), but strictly speaking there are better options. This is part of the explanation for the phenomenon that “computers get faster every year, but [insert program] is just as slow as ever!” Since the performance of [insert program] we deemed to be “good enough”, this extra processing power is instead being devoted to program correctness. If you’ve ever used older versions of Windows, then you know that your programs not constantly crashing is a Good Thing.

This is fine and good for those programs, but what about the ones that cannot afford this luxury? These other programs fall into a few general categories, two of which we’ll call “real-time” and “big data.” These are buzzwords that you’ve likely heard before, “big data” programs are the programs that actually process one billion element arrays. An example of this sort of software would be software that is run by a financial company. Financial companies have billions of transactions per day, and these transactions need to post as quickly as possible. (suppose you deposit a check, you want those funds to be available as quickly as possible) These companies need all the speed they can get, and all those extra instructions dedicated to totality are holding up the show.

Meanwhile “real-time” applications have operations that absolutely must complete in a set amount of time. Suppose I’m flying a jet, and I push the button to raise a wing flap. That button triggers an operation in the program running on the flight computer, and if that operation doesn’t complete immediately (where “immediately” is some fixed, non-zero-but-really-small amount of time) then that program is not correct. In these cases, the programmer needs to have very precise control over what instructions are produced, and they need to make every instruction count. In these cases, redundant totality checks are a luxury that is not in the budget.

Real-time and big data programs need to be fast, so they are often implemented in unsafe languages, but that does not mean that invoking undefined behavior is OK. If a financial company sets your account balance to be check value / 0, you are not going to have a good day. If your car reads the braking strength from a location off to the right of the braking strength array, you are going to die. So, what do these sorts of programs do?

One very common method, often used in safety-critical software such as a car’s onboard computer is to employ strict coding standards. MISRA C is a set of guidelines for programming in C to help ensure program correctness. Such guidelines instruct the developer on how to program to avoid unsafe behavior. Enforcement of the guidelines is ensured by peer-review, software testing, and Static program analysis.

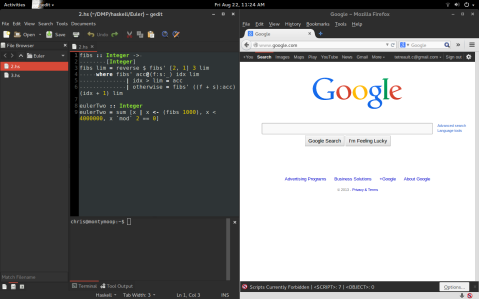

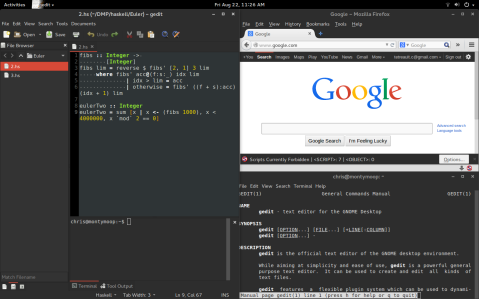

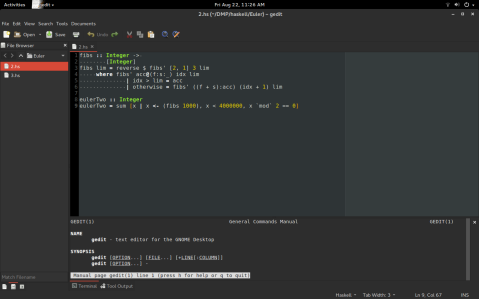

Static program analysis (or just static analysis) is the process of running a program on a codebase to check it for defects. For MISRA C, there exists tooling to ensure compliance with its guidelines. Static analysis can also be more general. Over the last year or so, I’ve been assisting with a research project at UCSD called Liquid Haskell. Simply put, Liquid Haskell provides the programmer with ways to specify requirements about the inputs and outputs of a piece of code. Liquid Haskell could ensure the correct usage of division by specifying a “precondition” that “the denominator must not equal zero.” (I believe that this actually comes for free if you use Liquid Haskell as part of its basic built-in checks) After specifying the precondition, the tool will check your codebase, find all uses of division, and ensure that you ensured that zero will never be used as the denominator.

It does this by determining where the denominator value came from. If the denominator is some literal (i.e. the number 7, and not some variable a that can take on multiple values), it will examine the literal and ensure it meets the precondition of division. If the number is an input to the current routine, it will ensure the routine has a precondition on that value that it not be zero. If the number is the output from some other routine, it verifies that the the routine that produced the value has, as a “postcondition”, that its result will never be zero. If the check passes for all usages of division, your use of division will be declared safe. If the check fails, it will tell you what usages were unsafe, and you will be able to fix it before your program goes live. The Haskell programming language is very safe to begin with, but a Haskell program verified by Liquid Haskell is practically Fort Knox!

The Human Factor

Humans are imperfect, we make mistakes. However, we make up for it in our ability to respond to dynamic situations. A human would never fail to grab the 259th egg from a 12 egg carton and crack it into a bowl; the human wouldn’t even try. The human can see that there is only 12 eggs without having to be told to do so, and will respond accordingly. Machines do not make mistakes, they do exactly what you tell them to, exactly how you told them to do it. If you tell the machine to grab the 259th egg and crack it into a bowl, it will reach it’s hand down, grab whatever is in the space 258 egg lengths to the right of the first egg, and smash it on the edge of a mixing bowl. You can only hope that nothing valuable was in that spot.

Most people don’t necessarily have a strong intuition for what “undefined behavior” is, but mathematicians and programmers everywhere fight this battle every day.